I Over-Engineered My Blog in Rust with Serverless

Introduction

Building a blog offers various options, each with its own trade-offs. You could opt for user-friendly platforms like Medium, choose feature-rich, self-hosted solutions like WordPress that offer extensive ecosystems but come with performance and maintenance considerations, or select a static site generator for simplicity and speed. At first glance, this site might appear to be just another static site, but appearances can be deceiving.

When I started this project, I had a set of objectives: build a blog using Rust, ensure high performance, keep costs minimal, and establish patterns I want to use in future projects. The initial iteration met some of these requirements, but from the start I knew I needed to get something up and iterate from there. The first iteration was a blog built in Rust that cost little to nothing to run. However, it was rough, not extensible, and performed poorly on constrained networks, whether because of high latency or because of downloading 400 kB WebAssembly blobs over limited bandwidth. The solution, while acceptable, could be significantly improved.

So, I'm not completely rewriting my blog, but it needs a substantial refactor. I have set the following requirements:

- Continue using Rust and Yew.

- Ensure the site is dynamic; I don’t want to redeploy every time I post a new article.

- The solution must be performant, utilizing server-side rendering (SSR) while returning responses quickly.

- The site must be environmentally conscious; there's no value in running a small blog that requires a server to run 24/7.

- The site should scale, as you never know what load your site might come under.

- The site should be cost-effective to run.

These are a challenging set of requirements. How do you build something performant, using SSR, and yet not have a server running 24/7? And how can it cost very little while also being able to update without a new deployment every time? Fortunately, I have a plan.

The Approach

Server-side rendering that's dynamic without constantly running a server? It seems like conflicting requirements, but maybe not. One technology that has been around for some time, yet is often underutilized, is serverless computing. Serverless is a method of providing compute resources on demand. At a very high level, you deploy a function to a serverless environment, and whenever a request is made, an instance of that function starts up (or an already running instance is reused), executes the code for the request, and is shut down once it has been idle for a while. This approach allows compute resources to be shared among many functions without needing to run any of them 24/7.

Infrastructure

Serverless computing has its benefits and drawbacks. It's not a perfect solution, but it helps meet the requirements for this blog. For this project, I am using AWS Lambda, one of the many Functions as a Service (FaaS) platforms available, and one I’m familiar with. Lambda is highly scalable, capable of spinning up thousands of concurrent executions, and cost-effective, especially with AWS’s generous free tier offering ample free compute for a small website. Additionally, it supports Rust without requiring a WebAssembly layer!

However, AWS Lambda has its limitations. Many are familiar with the issue of cold starts, where the lambda function, if not invoked recently or if the previous instance has expired, must be completely initialized upon the next execution. This can lead to significant wait times in some languages, with delays of several seconds before the code even begins execution. Thankfully, Rust experiences relatively brief cold starts, typically under 100ms.

The code

Infrastructure is great, but we need some code to actually do what we need it to do. Thankfully, there wasn’t much that I needed to change from the original implementation. Ninety percent of the viewable elements did not need any changes. However, the methods used to run, render, and retrieve data would no longer work.

Supporting AWS Lambda

In updating this site, I wanted it to render on AWS Lambda, but I also wanted to ensure that it could still run on the client if needed. This required making the code extensible. To start, I added a new Server module to handle execution on AWS Lambda, keeping the server code separate from the render code. The idea was to let another renderer use the view with little to no change. This module is straightforward to start with. Cargo Lambda is a tool for building Rust functions for AWS Lambda and deploying them natively, and it can also run the lambda code locally, which simplifies development. Then, all you need to do is set up your entry point.

Cargo Lambda relies on two crates: lambda_runtime and tokio. As we are writing a web application, we will also use lambda_http, which builds on lambda_runtime and adds HTTP request and response types. Then, all we need is the entry point for our lambda:

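```rust
use lambda_http::{run, service_fn, Body, Error, Request, Response};
use tracing::info;

#[tokio::main]
async fn main() -> Result<(), Error> {
    // Runs once per Lambda instance (on a cold start); logs end up in CloudWatch.
    tracing_subscriber::fmt()
        .with_max_level(tracing::Level::INFO)
        .with_target(true)
        .without_time()
        .with_line_number(true)
        .init();

    info!("Initialising Lambda");

    // Every incoming request is handed to `function_handler`.
    run(service_fn(function_handler)).await
}

async fn function_handler(_event: Request) -> Result<Response<Body>, Error> {
    // An empty 200 OK for now.
    let response = Response::builder()
        .status(200)
        .body("".into())
        .map_err(Box::new)?;
    Ok(response)
}
```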

This setup is straightforward. The main function acts as the constructor for the lambda: all initial setup happens here, and it runs only once per instance (on a cold start) rather than on every request. Here we set up logging so that our logs end up in CloudWatch. Any time an event is sent to the lambda, it is passed to function_handler, where the event is processed. If we deploy now and make a request, we get a 200 OK with no content, as defined by the handler. For a blog, though, we want some content, so what's next?
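Because a warm instance is reused across invocations, expensive setup (loading a template, building SDK clients, reading configuration) is worth doing once per instance instead of once per request. Besides building it in main and moving it into the service_fn closure, another option is lazy initialization. The std-only sketch below assumes a hypothetical AppState read by a stand-in handler; std::sync::OnceLock builds it on first access and hands out the same value afterwards.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::OnceLock;

/// Hypothetical per-instance state; a real function might keep the HTML
/// template or SDK clients here.
pub struct AppState {
    pub greeting: String,
}

/// Counts how often the state is built (for demonstration only).
pub static INIT_COUNT: AtomicUsize = AtomicUsize::new(0);

static STATE: OnceLock<AppState> = OnceLock::new();

/// Returns the shared state, building it only on the first call
/// (which, in Lambda terms, happens during the cold start).
pub fn app_state() -> &'static AppState {
    STATE.get_or_init(|| {
        INIT_COUNT.fetch_add(1, Ordering::SeqCst);
        AppState {
            greeting: String::from("hello"),
        }
    })
}

/// Stand-in for the per-request handler: it borrows the shared state
/// instead of rebuilding it on every call.
pub fn handle_request(path: &str) -> String {
    let state = app_state();
    format!("{} from {}", state.greeting, path)
}
```

Every request after the first reuses the same AppState for as long as the instance stays warm; a new instance starts over with a fresh initialization.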

Let's add the renderer to function_handler, leaving hydratable set to false since we are only rendering on the server for now:

```rust
let render_result = ServerRenderer::<ServerApp>::with_props(move || ServerAppProps {})
    .hydratable(false)
    .render()
    .await;
```

And add our first component:

```rust
use yew::prelude::*;

#[derive(Clone, PartialEq, Properties)]
pub struct ServerAppProps {}

#[function_component]
pub fn ServerApp(_props: &ServerAppProps) -> Html {
    // Nothing to render yet.
    html! {}
}
```

Great, we now have an AWS Lambda that can render something. However, even ignoring that ServerApp has nothing to render, a user calling our endpoint will still get an empty response, because the handler never puts the rendered output into the response body. Let's fix that. First, we need an index file. It acts as the root of our app and contains a placeholder for our content:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1" />
  </head>
  <body>
    <!--%BODY_PLACEHOLDER%-->
  </body>
</html>
```

Inside a Lambda function we can't count on the project's files being on disk the way they would be on a conventional server. For the sake of this example, let's assume we have a mechanism to load the HTML template into memory using a function called load_index.
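One simple way to provide load_index, assuming the template can be baked into the binary at build time, is to embed it as a string constant; include_str! does the same thing from a file on disk at compile time. The names INDEX_TEMPLATE and inject_body below are hypothetical. inject_body returns None when the placeholder is missing, so a broken template is caught instead of silently serving an empty page.

```rust
/// The page shell. In a real project this could be
/// `include_str!("../index.html")`, which embeds the file at compile time.
const INDEX_TEMPLATE: &str = r#"<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="utf-8" />
<meta name="viewport" content="width=device-width, initial-scale=1" />
</head>
<body>
<!--%BODY_PLACEHOLDER%-->
</body>
</html>"#;

const PLACEHOLDER: &str = "<!--%BODY_PLACEHOLDER%-->";

/// Returns the template. No file system access is needed because the
/// string lives in the binary's read-only data.
pub fn load_index() -> &'static str {
    INDEX_TEMPLATE
}

/// Replaces the first placeholder with the rendered body, or returns
/// `None` if the template has no placeholder.
pub fn inject_body(index: &str, body: &str) -> Option<String> {
    if index.contains(PLACEHOLDER) {
        Some(index.replacen(PLACEHOLDER, body, 1))
    } else {
        None
    }
}
```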

Next, we update our function handler as follows:

```rust
async fn function_handler(_event: Request) -> Result<Response<Body>, Error> {
    // Render the Yew app to an HTML string on the server.
    let render_result = ServerRenderer::<ServerApp>::with_props(move || ServerAppProps {})
        .hydratable(false)
        .render()
        .await;

    // Insert the rendered markup into the page template.
    let index = load_index();
    let body = index.replace("<!--%BODY_PLACEHOLDER%-->", &render_result);

    let response = Response::builder()
        .status(200)
        .header("content-type", "text/html")
        .body(body.into())
        .map_err(Box::new)?;
    Ok(response)
}
```

With these changes, we now have a Lambda function that can handle a request and render some content. From here, we could take the URI from the event and feed it to our view. However, this exposes one glaring issue: handling environment-specific implementations, which is the topic of the next section.
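Taking the URI from the event could look roughly like the std-only sketch below, which maps a request path to a hypothetical Route enum that the view could match on. In the real handler the path would come from the lambda_http Request, for example via event.uri().path(); the route names and the /posts/ layout are assumptions for illustration.

```rust
/// Hypothetical routes for the blog; the real site's routes may differ.
#[derive(Debug, PartialEq)]
pub enum Route {
    Home,
    Post(String),
    NotFound,
}

/// Maps a request path such as "/posts/hello-world" to a Route.
pub fn route_for(path: &str) -> Route {
    // Ignore trailing slashes so "/posts/a/" and "/posts/a" match the same route.
    let trimmed = path.trim_end_matches('/');
    if trimmed.is_empty() {
        return Route::Home;
    }
    match trimmed.strip_prefix("/posts/") {
        // Accept a single, non-empty path segment as the post ID.
        Some(id) if !id.is_empty() && !id.contains('/') => Route::Post(id.to_string()),
        _ => Route::NotFound,
    }
}
```

In a full Yew app, yew-router's Routable derive covers the same ground; the sketch only shows where the URI enters the rendering path.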

Using Services in Yew

This is probably the area where I spent the most time experimenting with different solutions, and it’s also where I feel I'm going against the conventional approach. Many developers use feature flags to handle the differences between client-side and server-side implementations. Typically, this logic is embedded within the component that requires it: on the client side, you might make an HTTP request, while on the server side, you retrieve the content from a source like S3 or DynamoDB. This approach is practical, but I prefer a different pattern. Coming from a Java background, I favor abstracting logic as much as possible to reduce duplication and allow components to focus on their primary responsibility. Why should our article renderer need to know where the article originated? It should simply have a mechanism to retrieve the content it needs and then render it accordingly.

In Java, we often use Services with Interfaces, which define the contract for how requests are made and allow one or more services to implement the logic. Rust offers a similar capability through traits, which support polymorphism in two forms: static dispatch through generics, where the implementation is chosen at compile time, and dynamic dispatch through trait objects (dyn Trait), where it is chosen at runtime. The latter is the closest equivalent to a Java interface. I aim to define services that implement the logic I need, providing the implementation based on the given environment and configuration. Let's consider a simplified example.
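To make the compile-time versus runtime distinction concrete, here is a small std-only sketch; the Greeter trait and its implementations are illustrative and not part of the blog's code. greet_static is monomorphized for each concrete type, while greet_dynamic is compiled once and resolves the call through a vtable, which is what allows greeter_for to pick an implementation at runtime.

```rust
pub trait Greeter {
    fn greet(&self) -> String;
}

pub struct English;
pub struct French;

impl Greeter for English {
    fn greet(&self) -> String {
        "hello".to_string()
    }
}

impl Greeter for French {
    fn greet(&self) -> String {
        "bonjour".to_string()
    }
}

/// Static dispatch: the compiler generates a separate copy for each `T`.
pub fn greet_static<T: Greeter>(g: &T) -> String {
    g.greet()
}

/// Dynamic dispatch: a single copy; the call goes through a vtable.
pub fn greet_dynamic(g: &dyn Greeter) -> String {
    g.greet()
}

/// Chooses an implementation at runtime, which requires a trait object.
pub fn greeter_for(lang: &str) -> Box<dyn Greeter> {
    match lang {
        "fr" => Box::new(French),
        _ => Box::new(English),
    }
}
```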

Initially, I tried something like this, intending to pass the trait object to the view through its props and share it with components from there. Props in Yew have specific requirements: they must be cloneable, must implement PartialEq, and must implement Properties.

```rust
pub trait ConfigProvider: Clone + PartialEq {
    fn get_variable(&self, id: &str) -> Result<String, String>;
}

#[derive(Clone, PartialEq, Properties)]
pub struct ServerAppProps {
    config_provider: Arc<Mutex<dyn ConfigProvider>>,
}
```

However, this code results in a compilation error:

```text
error[E0038]: the trait `renderer::ConfigProvider` cannot be made into an object
   --> server/src/renderer.rs:111:32
    |
111 |     config_provider: Arc<Mutex<dyn ConfigProvider>>,
    |                                ^^^^^^^^^^^^^^^^^^ `renderer::ConfigProvider` cannot be made into an object
    |
note: for a trait to be "object safe" it needs to allow building a vtable to allow the call to be resolvable dynamically; for more information visit <https://doc.rust-lang.org/reference/items/traits.html#object-safety>
   --> server/src/renderer.rs:105:35
    |
105 | pub trait ConfigProvider: Clone + PartialEq {
    |           --------------          ^^^^^^^^^ ...because it uses `Self` as a type parameter
    |           |
    |           this trait cannot be made into an object...
```

The issue arises from Rust's requirements for a trait to be object safe. Among other rules, an object-safe trait must satisfy the following:

- The trait must not require Self: Sized. Clone does, so any trait with Clone as a supertrait cannot be made into an object.

- Every method must take self, &self, or &mut self as its receiver, or be explicitly excluded from the vtable with where Self: Sized.

- Methods must not use Self in their parameters or return type other than as the receiver, and must not have generic type parameters.

At first glance, this might seem confusing, and the distinction between self and Self is crucial. Self is the concrete type implementing the trait, whereas self is the instance of that type. A trait object (dyn ConfigProvider) erases the concrete type, so every call is resolved at runtime through a vtable, and each vtable entry needs a signature that does not depend on the erased type. A method that takes or returns Self cannot meet that requirement, because behind a trait object the compiler no longer knows what Self is or how large it is. That is exactly what the error points at: Clone requires Self: Sized and returns Self, and PartialEq uses Self as its default type parameter (PartialEq<Rhs = Self>), so eq expects another value of the same, now unknown, type.
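The where Self: Sized escape hatch mentioned above is what lets a trait keep a Self-returning method and still be used as dyn Trait: the method is left out of the vtable and can only be called on concrete types. A minimal std-only sketch, using a hypothetical Settings trait rather than the blog's ConfigProvider:

```rust
pub trait Settings {
    /// Object safe: takes &self and returns a concrete type.
    fn get(&self, key: &str) -> Option<String>;

    /// Returns `Self`, which would normally make the trait non-object-safe;
    /// `where Self: Sized` excludes it from the vtable instead.
    fn duplicate(&self) -> Self
    where
        Self: Sized;
}

#[derive(Clone)]
pub struct FixedSettings {
    pub value: String,
}

impl Settings for FixedSettings {
    fn get(&self, key: &str) -> Option<String> {
        if key == "greeting" {
            Some(self.value.clone())
        } else {
            None
        }
    }

    fn duplicate(&self) -> Self {
        self.clone()
    }
}

/// Compiles because `Settings` is still object safe.
pub fn lookup(settings: &dyn Settings, key: &str) -> Option<String> {
    settings.get(key)
}
```

The trade-off is that duplicate is unavailable through a dyn Settings, which is why the approach below routes cloning through a separate, object-safe clone_box method instead.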

Resolving Object Safety and Trait Implementation

We encounter a dilemma where the props must implement Clone and PartialEq, but the trait itself cannot, leading to a sort of chicken-and-egg situation. There are a couple of paths forward:

- Manual Implementation: Manually implement Clone and PartialEq on the props. While feasible, this approach can be laborious, especially with complex views, and it does not help when the services are shared through Yew's context, which places the same Clone and PartialEq requirements on the context value.

- Trait Wrapping: The alternative, and the one I opted for, involves wrapping our traits. We can hide them from the props' view and provide a service that encapsulates our provider. This method effectively abstracts the complexities and meets our requirements.

I started by defining base Provider and Service traits to ensure they meet basic requirements:

```rust
use std::ops::Deref;
use uuid::Uuid;

pub trait Service: Send + Sync + Deref {
    fn get_id(&self) -> &Uuid;
}

// Two services are considered equal when their IDs match.
impl PartialEq for dyn Service<Target = Box<dyn Provider>> {
    fn eq(&self, other: &Self) -> bool {
        self.get_id().eq(other.get_id())
    }
}

pub trait Provider: Send + Sync {
    fn get_id(&self) -> &Uuid;
}
```

This setup ensures that:

- All Providers offer a unique ID.

- All Services implement PartialEq based on the Provider's ID.

- Both Providers and Services are thread-safe, as ensured by Send + Sync.

With the basic traits defined, we proceed to specify our ConfigProvider trait:

```rust
pub trait ConfigProvider: Provider + CloneConfigProvider {
    fn get_variable(&self, id: &str) -> Result<String, String>;
}

pub trait CloneConfigProvider {
    fn clone_box(&self) -> Box<dyn ConfigProvider>;
}

impl<T> CloneConfigProvider for T
where
    T: 'static + ConfigProvider + Clone,
{
    fn clone_box(&self) -> Box<dyn ConfigProvider> {
        Box::new(self.clone())
    }
}
```

This code snippet defines the ConfigProvider trait. The pattern used here is replicated across all providers, regardless of whether they're for configuration, document retrieval, or any other service. Although somewhat verbose, this implementation serves its purpose effectively. The next step would be to create various implementations of these providers, such as one for retrieving configuration from the environment, with the flexibility to add more providers as needed to accommodate different requirements.
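The snippet above stops at clone_box. A sketch of how such pieces commonly fit together: impl Clone for Box<dyn ConfigProvider> delegates to clone_box, and a ConfigService wrapper derives Clone, compares by provider ID, and derefs to the provider, which satisfies Yew's Clone + PartialEq requirements. This is a std-only approximation, not the blog's actual code: a u64 stands in for Uuid, the Service/Deref wiring is simplified, and StaticConfigProvider is a hypothetical in-memory provider.

```rust
use std::collections::HashMap;
use std::ops::Deref;

pub trait Provider: Send + Sync {
    fn get_id(&self) -> u64; // stands in for &Uuid
}

pub trait ConfigProvider: Provider + CloneConfigProvider {
    fn get_variable(&self, id: &str) -> Result<String, String>;
}

pub trait CloneConfigProvider {
    fn clone_box(&self) -> Box<dyn ConfigProvider>;
}

impl<T> CloneConfigProvider for T
where
    T: 'static + ConfigProvider + Clone,
{
    fn clone_box(&self) -> Box<dyn ConfigProvider> {
        Box::new(self.clone())
    }
}

// Lets Box<dyn ConfigProvider>, and anything containing it, derive Clone.
impl Clone for Box<dyn ConfigProvider> {
    fn clone(&self) -> Self {
        (**self).clone_box()
    }
}

/// Wrapper passed through props and context: Clone via clone_box,
/// equality by provider ID.
#[derive(Clone)]
pub struct ConfigService {
    inner: Box<dyn ConfigProvider>,
}

impl ConfigService {
    pub fn new(inner: Box<dyn ConfigProvider>) -> Self {
        ConfigService { inner }
    }
}

impl PartialEq for ConfigService {
    fn eq(&self, other: &Self) -> bool {
        self.inner.get_id() == other.inner.get_id()
    }
}

impl Deref for ConfigService {
    type Target = dyn ConfigProvider;

    fn deref(&self) -> &Self::Target {
        &*self.inner
    }
}

/// Hypothetical in-memory provider, e.g. for tests or a client build.
#[derive(Clone)]
pub struct StaticConfigProvider {
    id: u64,
    values: HashMap<String, String>,
}

impl StaticConfigProvider {
    pub fn new(id: u64, values: HashMap<String, String>) -> Self {
        StaticConfigProvider { id, values }
    }
}

impl Provider for StaticConfigProvider {
    fn get_id(&self) -> u64 {
        self.id
    }
}

impl ConfigProvider for StaticConfigProvider {
    fn get_variable(&self, id: &str) -> Result<String, String> {
        self.values
            .get(id)
            .cloned()
            .ok_or_else(|| format!("Failed to retrieve variable: {}", id))
    }
}
```

Comparing by ID keeps PartialEq cheap and sidesteps comparing trait objects directly, at the cost that two distinct providers with equal contents still compare as different.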

Managing Services in Yew

Dealing with configuration and services in Yew required a thoughtful solution to avoid common pitfalls like prop drilling and global static access. Here's how I navigated these challenges:

Prop drilling can be cumbersome, especially when a service is needed across many components. It leads to a tangled web of dependencies and complicates the component structure. On the other hand, while global static access, achievable with libraries like lazy_static, offers simplicity, it tends to lead to design issues, such as unclear variable purposes and inconsistent usage paths.

I chose Yew Context to manage service access across components. Like a global static, a context makes a value available without threading it through every component, but it is explicitly declared and scoped: only components nested inside the ContextProvider can read it, which reduces the potential for misuse. It also fits naturally into Yew's component architecture.

Here's how I implemented the EnvironmentConfigProvider, which retrieves configuration from the environment:

```rust
use std::env;
use tracing::info;
use uuid::Uuid;

#[derive(Clone, PartialEq)]
pub struct EnvironmentConfigProvider {
    id: Uuid,
}

impl EnvironmentConfigProvider {
    pub fn new() -> EnvironmentConfigProvider {
        EnvironmentConfigProvider { id: Uuid::new_v4() }
    }
}

impl Provider for EnvironmentConfigProvider {
    fn get_id(&self) -> &Uuid {
        &self.id
    }
}

impl ConfigProvider for EnvironmentConfigProvider {
    fn get_variable(&self, id: &str) -> Result<String, String> {
        info!("Retrieving env value for : {}", id);
        // Read the variable from the Lambda's environment.
        env::var(id).map_err(|e| format!("Failed to retrieve variable: {}, Error: {}", id, e))
    }
}
```

The services are then passed into the view as props and exposed to the components beneath it through a context provider, giving them a clean way to access configuration, document retrieval, and other services:

```rust
#[derive(Clone, PartialEq, Properties)]
pub struct ViewProps {
    pub config_service: ConfigService,
    ...
}

#[function_component]
pub fn View(props: &ViewProps) -> Html {
    html! {
        <>
            <ServiceContext config_service={props.config_service.clone()}>
                <NavBar/>
                <Home/>
            </ServiceContext>
        </>
    }
}

#[derive(Clone, PartialEq, Properties)]
pub struct ContextProps {
    pub config_service: ConfigService,
    pub children: Children,
}

#[function_component]
pub fn ServiceContext(props: &ContextProps) -> Html {
    html!(
        <ContextProvider<ConfigService> context={props.config_service.clone()}>
            {&props.children}
        </ContextProvider<ConfigService>>
    )
}
```

For asynchronous operations, such as fetching documents from a service, Yew's use_future hook and async traits are utilized to manage the operation without blocking the main thread:

```rust
// Native `async fn` in traits is not object safe, so Box<dyn DocumentProvider>
// needs a macro such as the async-trait crate (assumed here).
#[async_trait::async_trait]
pub trait DocumentProvider: Provider + CloneDocumentProvider {
    async fn get_document(&self, id: String) -> Result<Option<String>, String>;
}

pub struct DocumentService {
    pub inner: Box<dyn DocumentProvider>,
}

...

#[hook]
fn use_external_source_with(
    id: &String,
) -> SuspensionResult<UseFutureHandle<Result<Option<String>, String>>> {
    // Panics if no ContextProvider<DocumentService> is above this component.
    let context: DocumentService = use_context::<DocumentService>().unwrap();
    // The future must be 'static, so it takes an owned copy of the ID.
    let id = id.clone();
    use_future(move || async move { context.inner.get_document(id).await })
}

...

#[derive(Clone, PartialEq, Properties)]
pub struct ContentProps {
    pub id: String,
}

#[function_component]
pub fn Content(props: &ContentProps) -> HtmlResult {
    // `?` suspends the component until the document has been fetched.
    let res = use_external_source_with(&props.id)?;
    match &*res {
        Ok(Some(content)) => Ok(html!(content.clone())),
        Ok(None) => Ok(html!(<h1>{"404 Not Found"}</h1>)),
        Err(e) => Ok(Html::from(e)),
    }
}
```

In this example, use_external_source_with uses the use_future hook to fetch document content asynchronously based on an ID, while Content uses this hook to render the fetched content, or a 404 message if the content is not found.

SuspensionResult is how a hook tells Yew that its data is not ready yet. While the future is pending, use_future returns Err(Suspension), the ? operator in Content propagates it, and the component is suspended. The nearest Suspense ancestor then renders its fallback until the future resolves and the component renders again with the result.

In Yew, Suspension behaves differently depending on the rendering environment:

- Server-side Rendering: On the server, Suspension ensures that all asynchronous operations complete before the page is rendered. This matters for SEO and performance: the server sends a fully rendered HTML page to the client instead of incomplete or loading states, so the complete content is available on the first load.

- Client-side Rendering: On the client side, Suspension allows components to render immediately, even if the data they depend on hasn't been fetched yet. Parts of the UI can show placeholders or loading indicators independently while data is fetched asynchronously, which keeps the application feeling responsive.

Analyzing the Migration to Serverless

The journey from a static provider model to a bespoke serverless solution turned out to be more complex than anticipated. It involved extensive experimentation to discern effective strategies and approaches. The core of this transition lies in understanding the impact on performance and user experience.

Initial Implementation Drawbacks

The original site, while free to host, faced significant accessibility and speed issues:

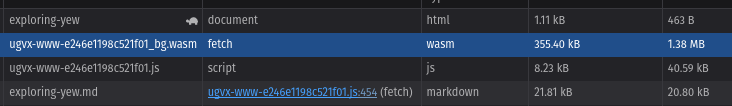

- Heavy reliance on WebAssembly: Although Rust-compiled WebAssembly can be performant, the initial site setup led to a slow rendering process, starting from fetching the root HTML to executing the WebAssembly binary and loading content.

- Large binary size: A notable drawback of using Rust in WebAssembly is the large binary size, with the site's binary being 355kB, which is substantial compared to typical JavaScript files.

- Serial loading process: The loading sequence involved multiple large requests, resulting in a slow, linear progression that hindered the overall user experience.

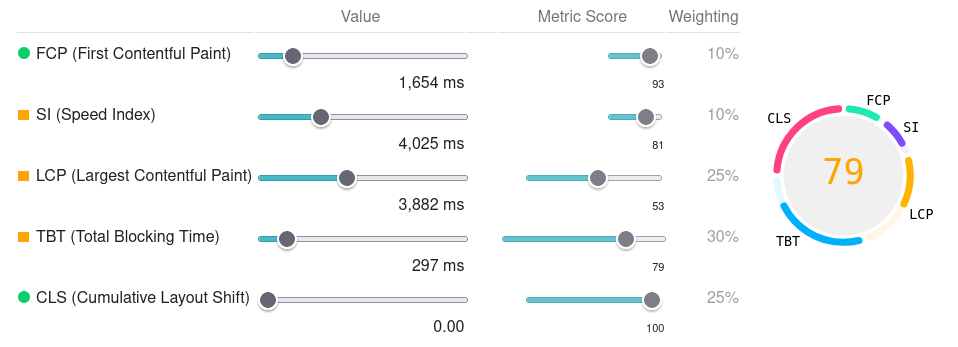

Using Google's PageSpeed Insights for analysis, several key performance metrics were highlighted:

- First Contentful Paint (FCP): The initial render time was slower than desired, impacting the user's first impression of the site.

- Largest Contentful Paint (LCP): The time taken to load the main content was 3.9 seconds, which is considered excessive, indicating the inefficiency of the initial solution in content delivery.

- Total Blocking Time (TBT) and Speed Index: These metrics further underscored the need for optimization, with a blocking time of 300ms and a speed index of 4 seconds, both of which point towards a less than optimal performance.

The initial site's performance, particularly in non-ideal conditions such as on mobile devices with slow internet connections, highlighted the limitations of the static, WebAssembly-based approach. The transition to a serverless architecture aimed to address these issues with faster loading times and a better overall user experience. The next section looks at how the serverless solution performed against the same metrics.

Serverless Implementation Analysis

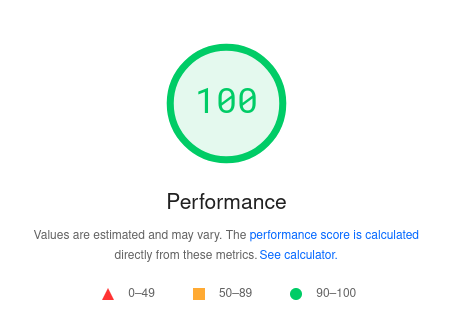

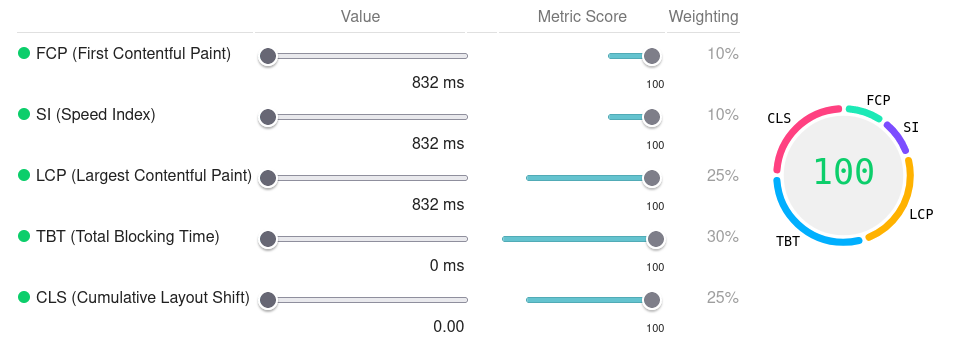

The transition to a serverless architecture marked a significant improvement in performance metrics. Let's look at how the new implementation compares to the initial one.

Performance Metrics

The serverless solution achieved a perfect performance score of 100 on Google's PageSpeed Insights, a substantial improvement over the original. It reached this score even with the added latency of a cold start, which shows how effective the serverless approach can be.

In real-world terms, in a network-constrained environment it took less than a second from the moment the request for the article went out for the function to wake up, retrieve the content, render the page, return it to the client, and for the client to render it. On desktop, it took 250ms. That is an outstanding result compared with the original solution, and it shows what serverless can deliver for fast web content.

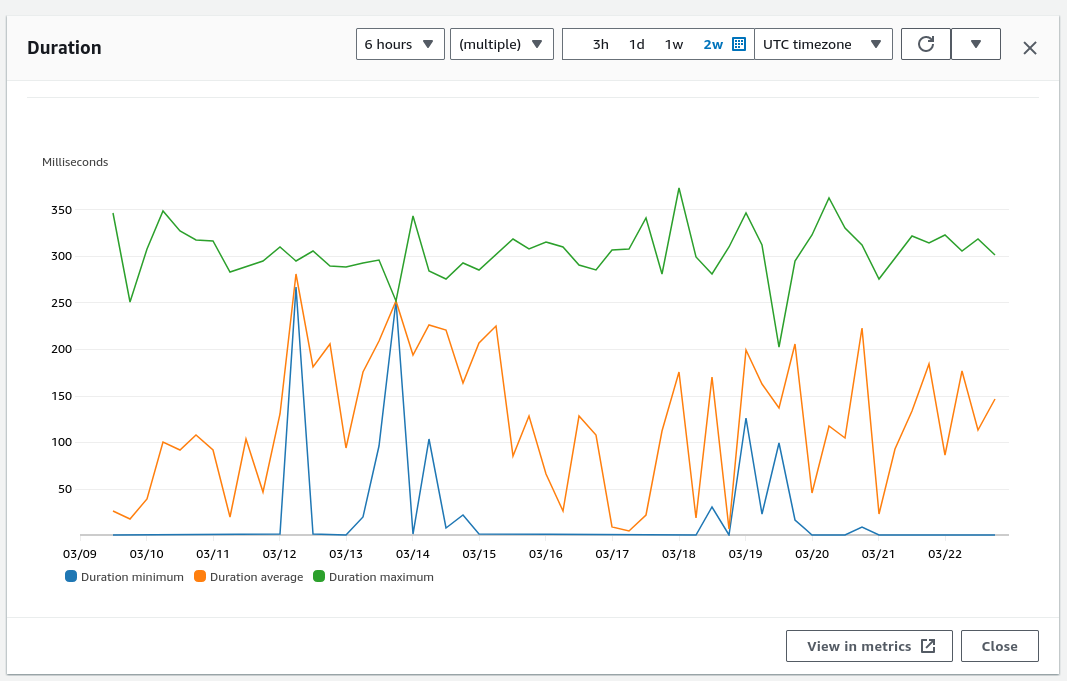

Internal measurements showed that rendering is fast, with best-case executions under 2ms. Network overhead from data retrieval remains a factor, but overall performance holds up well and even improves under load, likely because more requests are served by warm instances rather than cold starts.

Reflection and Conclusion

The journey to achieving this serverless implementation was longer and more complex than anticipated, but the outcomes are highly satisfactory. While the approach may be overkill for a simple blog, the experiment provided valuable insights and showcased the capabilities of Rust in a serverless environment.

Would I recommend this approach to everyone? Probably not, as similar results might be achievable with simpler tools like static site generators combined with efficient hosting solutions. However, the experience was enriching, offering a chance to explore new patterns and learn more about Rust’s potential and limitations in web development.

Moving forward

While this post might be the last major discussion about the blog's backend, the journey doesn't end here. Future enhancements, like RSS feed support and a styling overhaul, are on the horizon. Beyond this, I aim to explore other under-discussed topics and work on exciting projects, including an MMO Text Adventure. The goal is to keep producing concise, impactful content on niche yet valuable topics in the tech space.

This serverless journey not only optimized the blog but also opened avenues for further exploration and innovation in software development, especially within the Rust ecosystem.